Automated driving is back on the industry’s front burner, a resurgence led primarily by advances in artificial intelligence and marketplace success, industry insiders said at CES 2026 in Las Vegas in early January.

Automaker interest in pursuing top-tier Level 4 and 5 automated driving cooled in recent years as the technology hit some speed bumps, OEMs backed away from mobility-service schemes and available product-development dollars largely were directed toward battery-electric vehicles (BEVs) instead.

Now, with a policy-driven slowdown in U.S. BEV adoption, BEVs' continued cost disadvantage compared with internal-combustion-engine vehicles and a consumer base still reluctant to switch to full-electric vehicles in large enough numbers, industry investment has begun to flow away from BEVs and back toward advanced driver-assistance system (ADAS) technology.

But it isn’t just the lower boil in BEV activity that is sparking renewed ADAS interest, executives told WardsAuto.

“I think automakers worldwide are seeing the benefit automated driving can bring,” said Rajat Sagar, vice president-product management for the Snapdragon Ride Pilot platform (developed with BMW) at chip and software-solutions provider Qualcomm.

Automakers in China and others such as Tesla have been deploying ever-more-sophisticated ADAS capabilities as a way to entice consumers, Sagar noted. “That’s what is fueling the need for these implementations.”

Why automakers are investing more in ADAS

C.J. Finn, U.S. automotive leader for consultant PricewaterhouseCoopers, contends automakers now see being among the first to offer higher levels of automated driving as the best near-term opportunity to gain market share.

“There’s a constant fight of what’s that [next] technology where if you’re the first mover you can really capture market share,” Finn told WardsAuto. “Some thought electrification may have been it, but autonomous is that next thing ... When you move from Level 2-plus to Level 4, that’s the game changer.”

Vlad Voroninski, CEO at California startup Helm.ai, agrees the money is once again flowing into automated driving.

“OEMs [realize] the main revenue driver is going to be in assisted driving for commercial vehicles. That’s where the capital’s flowing,” he said. “It is probably the No. 1 topic in the boardrooms of automotive companies.”

Even in the U.S. market, there’s evidence of real consumer demand for the technology. Researcher AutoPacific said in July that 43% of U.S. car buyers point to hands-off semi-autonomous driving for highway use (Level 2-plus systems such as General Motors’ Super Cruise, Ford’s BlueCruise and Tesla’s Full Self-Driving) as the technology they want most in their next vehicle. That marked a hefty 20-point increase from a year earlier.

A 2023 McKinsey study showed two-thirds of consumers would be willing to pay a $10,000 premium for Level 4 automated driving technology (hands off and eyes off on both highway and urban roads) on their next vehicle. Precedence Research predicts the autonomous-vehicle market could top $2 trillion annually by 2035, growing more than 35% per year between now and then.

How GenAI is helping build the momentum

Making all this possible is rapid technological advancement, thanks in large part to a leap in AI capability that has altered the approach to self-driving software development. Improvements in, and proliferation of, sensor technology are also bolstering the quality and volume of data required to perfect ADAS operation. Mobileye, for example, said it collects data in real time from some 8 million vehicles globally, last year harvesting 32 billion miles (51 billion km) of data.

“What we’re doing today isn’t something we just decided to do yesterday,” said Manuela Locarno Ajayi, a product-tech executive with TomTom, noting that with half the cars on the road now connected, there’s a wealth of real-world performance data available for developers to help fine-tune their software. “We’ve been trying to do it for a long time. Only now the technology is catching up to be scalable and cost-effective [and] to have this level of precision.”

TomTom, which used CES 2026 to show off its next-generation high-definition mapping for navigation systems it is supplying to Volkswagen’s CARIAD software-development group, among others, said it now can map 470,000 miles (750,000 km) per day thanks to the advances in AI technology and the growing amount of real-world data available.

“I think the technology is closer to being ready,” Jagan Rajagopalan, head of strategy and portfolio for integration and software specialist Elektrobit, said in explaining the recent surge in ADAS interest from automakers. “It’s just AI being ready; the lidar, the cameras and technology getting more standardized. In 2030, you’ll begin to see more mainstream autonomous vehicles.”

The arrival of generative AI and the development of visual language models (VLMs) have changed the way automated-driving software is written and tested, industry insiders said. With VLMs, AI software now can understand and act on image inputs, not just language.

“Overnight we can train [virtually] with data that no real-world data can match,” said Mobileye CEO Amnon Shashua, adding Mobileye can run billions of simulations a day.

“The models you’re seeing now are designed to be end-to-end models,” said Nakul Duggal, executive vice president and general manager of automotive for Qualcomm, meaning the software thinks more like a human driver and can analyze the scene and determine the vehicle’s path much more quickly now. “That evolution in AI has only happened in the last three to three-and-a-half years. The data has existed but the visual-language models didn’t.”

“As you train those newer models, you get much more robust outcomes,” he said. “Once you have the recipe in place, which is not easy to get to, then it’s [simply] a data-driven obstacle.”

In earlier attempts at solving autonomous driving, Helm.ai’s Voroninski said: “You would have a very serious ceiling on what you could do. You might never be able to offer Level 3 or Level 4 features with that approach.”

Now, with Helm.ai’s perception stack said to function more like a human brain, there’s line of sight to the ultimate goal.

“Seventy percent of your brain is fully dedicated just to being able to perceive the world,” Voroninski said. “That’s a problem we’ve spent years solving and have effectively solved from an automotive production-grade perspective.

“We've shown that we can actually train an autonomous driving system that can do 20-minute drives in cities it's never seen before, because we have this production-grade perception stack.”

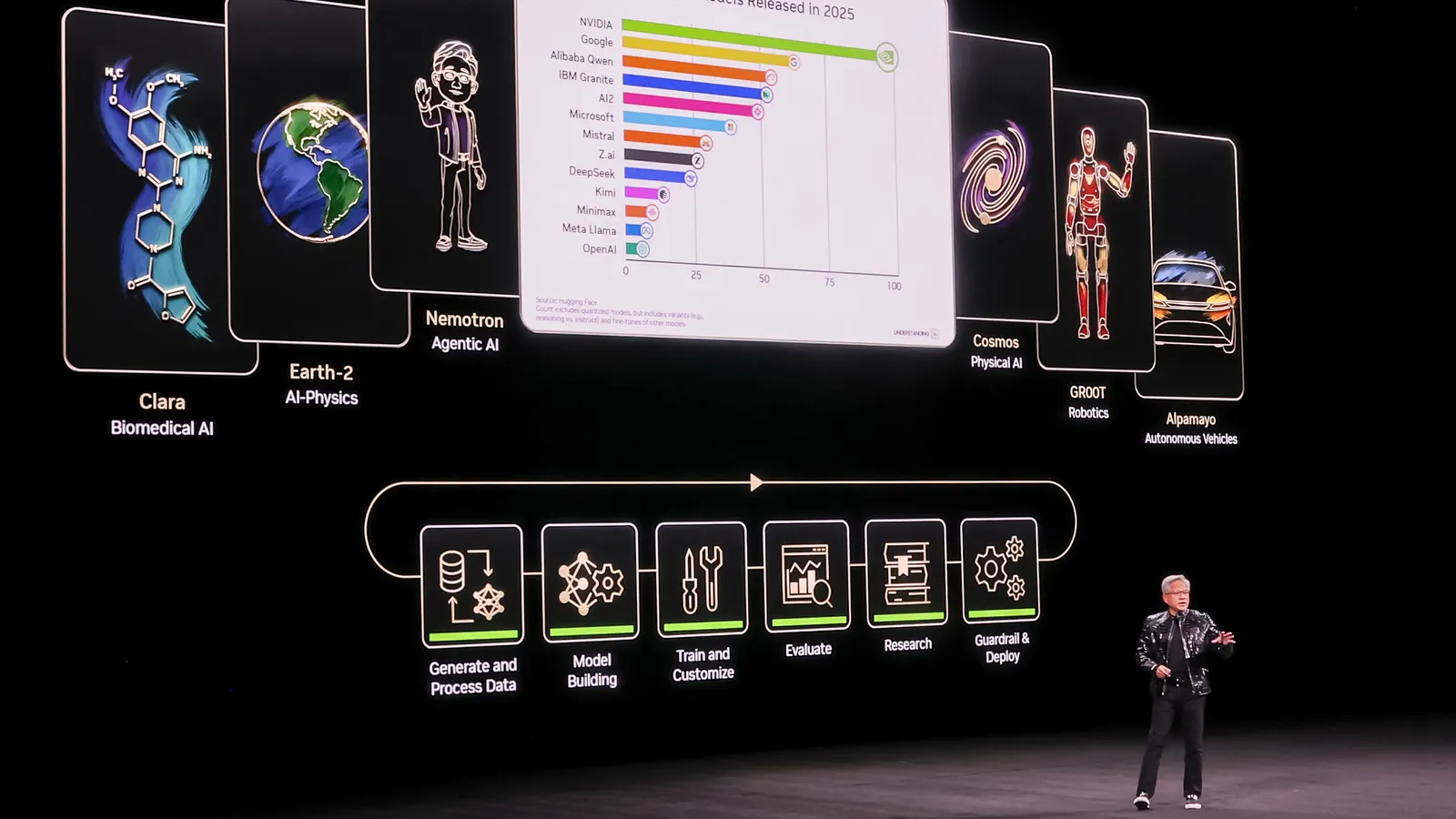

NVIDIA President and CEO Jensen Huang summed it up succinctly. “You no longer program the software, you train the software,” he said, pointing to Alpamayo, the open-source platform NVIDIA launched at CES 2026, which underpins the automated-driving stack deployed on the Mercedes-Benz CLA. He said $100 trillion of global R&D has shifted to AI from classical methods of product development in the last few years.

As costs fall, ADAS could be built for the masses

While the technology is improving, costs are declining, meaning higher-end Level 2-plus capability will begin to filter down to more mass-market vehicles. Ford’s move to develop its own technology in-house is just one example of the drive to get Level 2-plus functionality into entry-level vehicles.

Mobileye, for instance, said its Surround ADAS program with a U.S. automaker, beginning in mid-2028, will cover up to 9 million vehicles. That comes on top of a similar program Volkswagen announced in 2025 that is expected to include up to 10 million Surround ADAS-equipped vehicles.

“This is a very cost-efficient solution,” said Nimrod Nehushtan, executive vice president-business development and strategy for Mobileye. “These are two of the top six automakers in the world that are sourcing their high-volume models with this solution. It shows there’s a trend, that there’s a lot of focus on the cutting-edge performance in the premium segments, but there is also an interesting development coming from high-volume cars.”

Qualcomm says its Snapdragon Ride Flex is another lower-cost platform gaining traction. It can combine cockpit infotainment functions and ADAS controls on a single system-on-chip (SoC), making it well-suited for lower-priced vehicles. Its first production application debuted last year on several Chinese models, including BAIC’s ARCFOX Alpha T5, General Motors’ Buick Electra L7 and Dongfeng Nissan’s N6, and Qualcomm says it has more than 10 partners with programs in the works using the Ride Flex SoC, including a collaboration with Hyundai Mobis.

“What this means is, you are starting to see the implementation of ADAS and [in-vehicle infotainment] across almost every tier of vehicle,” Qualcomm’s Duggal said. No vehicle segment is being left out of the movement for Level 2-plus and above autonomy, “which increases the market size for us and everybody else,” he added.

Costs will come down further as volumes rise, executives contend.

Shashua said Mobileye is targeting a 40% cost reduction for its Level 2-plus Surround ADAS system in 2028 and will continue to lower costs across its entire technology suite beyond that. For the Chauffeur Level 4 software that VW is deploying in its MOIA fleet, Mobileye is looking to slash costs by reducing the number of lidars required from three today to just one.

After years of promise, some developmental stumbles and on-and-off interest and investment from global automakers, it appears automated driving is gaining a solid foothold.

“I’m very certain in the next 10 years a large percentage of cars will be autonomous, or highly autonomous,” NVIDIA’s Huang predicted.

Correction: A previous version of this story misstated the number of vehicles that could receive Mobileye’s Surround ADAS platform beginning mid-2028, through a program with a U.S. automaker.